Automatic Classification of Cardiomegaly Using Deep Convolutional Neural Network

With powerful computational systems and access to vast amounts of data, artificial intelligence has achieved state-of-the-art results in many areas, including medicine. Researchers have applied various AI methods to analyze different types of data, which can in turn help medical professionals automate disease detection and save time. Moreover, deep learning methods have been used to create new synthetic data samples that could address data insufficiency or enhance the quality of existing data.

In this thesis work, completed under the supervision of the PRIVASA project consortium, a deep convolutional neural network is trained to automatically detect and classify cardiomegaly (an enlarged heart) from chest radiograph images. In addition, deep convolutional generative adversarial network (DCGAN) models are trained to synthesize new samples of chest radiograph data. The synthesized samples are used for GAN-based data augmentation to investigate whether it can improve the classification accuracy. The chest radiograph image dataset, extracted from the CheXpert collection, contains images belonging to two classes: normal and cardiomegaly.

To train the classification model, a transfer-learning method is applied that uses VGG16, an existing convolutional network architecture. Chest radiograph images from both classes are passed as input, and the network learns the patterns in the images over consecutive training iterations. After each iteration, the network parameters are adjusted based on the calculated loss to make better predictions. Over time, the network learns to classify images with higher accuracy.
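The update cycle described above can be illustrated with a minimal sketch. It is not the thesis implementation: the frozen VGG16 convolutional base is replaced here by precomputed toy feature vectors, and only a logistic classification head is trained with gradient descent on binary cross-entropy. The names `train_head` and `predict`, the learning rate, the epoch count, and the toy data are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights, bias, features):
    """Probability that one feature vector belongs to the positive class."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train_head(features, labels, epochs=200, lr=0.5):
    """Train a logistic head on frozen features with batch gradient descent.

    Returns the learned weights, the bias, and the loss recorded at each epoch.
    """
    n, dim = len(features), len(features[0])
    weights, bias = [0.0] * dim, 0.0
    history = []
    for _ in range(epochs):
        grad_w, grad_b, loss = [0.0] * dim, 0.0, 0.0
        for x, y in zip(features, labels):
            p = predict(weights, bias, x)
            # Binary cross-entropy, clipped to avoid log(0).
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)
            loss -= y * math.log(p_safe) + (1 - y) * math.log(1.0 - p_safe)
            # The gradient of the loss with respect to the logit is (p - y).
            for j in range(dim):
                grad_w[j] += (p - y) * x[j]
            grad_b += p - y
        # Adjust the parameters against the average gradient.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
        history.append(loss / n)
    return weights, bias, history


# Toy stand-in for features produced by a frozen convolutional base
# (hypothetical values; label 1 = cardiomegaly, 0 = normal).
toy_features = [[0.9, 0.2], [0.8, 0.1], [0.2, 0.7], [0.1, 0.9]]
toy_labels = [1, 1, 0, 0]

weights, bias, history = train_head(toy_features, toy_labels)
predictions = [round(predict(weights, bias, x)) for x in toy_features]
```

In this sketch the recorded loss falls from epoch to epoch, which is the behaviour the paragraph describes. In a real transfer-learning setup the feature vectors would come from the pretrained convolutional layers rather than from hand-written values.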

Overfitting is one of the main challenges in training a classification model: the model learns to fit the data points in the training dataset but fails to generalize to new, unseen data. One way to overcome this challenge is data augmentation, in which new instances of data are added to the training set. A standard technique for augmenting images is to transform existing images, for example by flipping, zooming, and color enhancement. However, these methods sometimes fail to add enough diversity, and for this reason GAN-based augmentation is often considered more effective.
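The standard transformations mentioned above can be sketched without any image library. The example below is a dependency-free illustration, not the augmentation pipeline used in the thesis: images are plain nested lists of grayscale values, contrast adjustment stands in for color enhancement, and the function names and the 4x4 sample patch are hypothetical.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def zoom_center(image, factor):
    """Zoom in on the centre by `factor` (>= 1), keeping the original size.

    Uses nearest-neighbour sampling, which is crude but dependency-free.
    """
    height, width = len(image), len(image[0])
    crop_h, crop_w = max(1, int(height / factor)), max(1, int(width / factor))
    top, left = (height - crop_h) // 2, (width - crop_w) // 2
    return [
        [
            image[top + (r * crop_h) // height][left + (c * crop_w) // width]
            for c in range(width)
        ]
        for r in range(height)
    ]


def adjust_contrast(image, factor):
    """Scale pixel deviations from the mean intensity, clipped to 0..255."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [
        [min(255, max(0, round(mean + factor * (p - mean)))) for p in row]
        for row in image
    ]


# Hypothetical 4x4 grayscale patch standing in for a chest radiograph.
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]

augmented = [
    flip_horizontal(image),
    zoom_center(image, 2.0),
    adjust_contrast(image, 1.5),
]
```

Each transformed copy is derived from a single source image, which is why such augmentation can add only limited diversity to the training set.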

A deep convolutional GAN consists of two competing subnetworks, a generator and a discriminator. The generator learns the distribution of chest radiograph image pixels and attempts to produce new synthetic samples. The discriminator, on the other hand, learns to distinguish the real images (coming from the domain dataset) from the synthetic ones (produced by the generator). As the two networks try to outperform each other over consecutive training iterations, the generator eventually learns to produce plausible examples, ideally to the point where the discriminator can no longer recognize synthetic images as fake.
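The competition between the two subnetworks can be made concrete by looking at their loss values. The sketch below assumes the common binary cross-entropy formulation with a non-saturating generator loss; the source does not state which loss was used, so this is an assumption, and the discriminator scores are hypothetical numbers rather than outputs of a trained model.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for one probability, clipped to avoid log(0)."""
    p = min(max(prediction, 1e-12), 1.0 - 1e-12)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def discriminator_loss(scores_real, scores_fake):
    """Low when real images are scored near 1 and synthetic images near 0."""
    real = sum(bce(s, 1) for s in scores_real) / len(scores_real)
    fake = sum(bce(s, 0) for s in scores_fake) / len(scores_fake)
    return real + fake


def generator_loss(scores_fake):
    """Low when the discriminator scores synthetic images as real."""
    return sum(bce(s, 1) for s in scores_fake) / len(scores_fake)


# Hypothetical discriminator outputs (probability that an image is real).
early_real, early_fake = [0.9, 0.8], [0.1, 0.2]  # discriminator is winning
late_real, late_fake = [0.5, 0.5], [0.5, 0.5]    # discriminator is guessing

early = (discriminator_loss(early_real, early_fake), generator_loss(early_fake))
late = (discriminator_loss(late_real, late_fake), generator_loss(late_fake))
```

When the discriminator can only guess, every score is 0.5, so its loss equals 2 ln 2 and the generator loss equals ln 2. Under the stated assumptions, this is the situation the paragraph describes, in which synthetic images can no longer be told apart from real ones.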

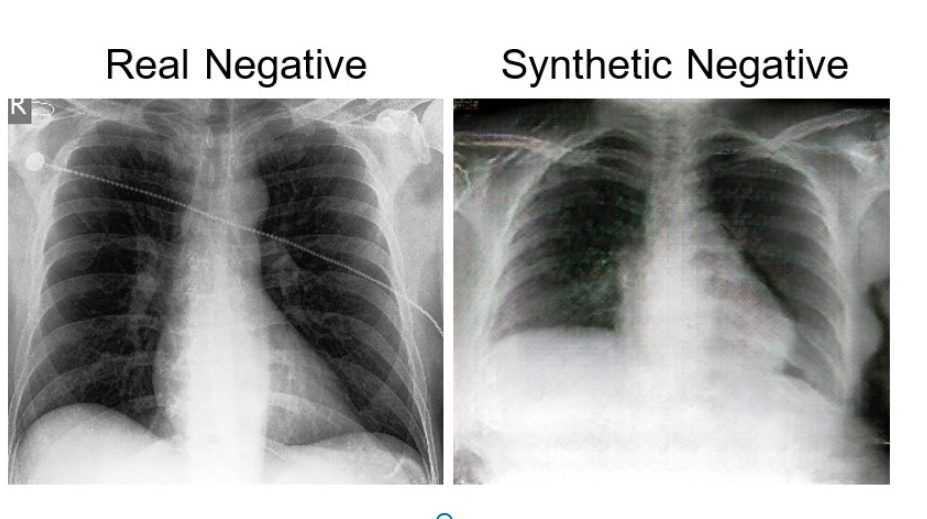

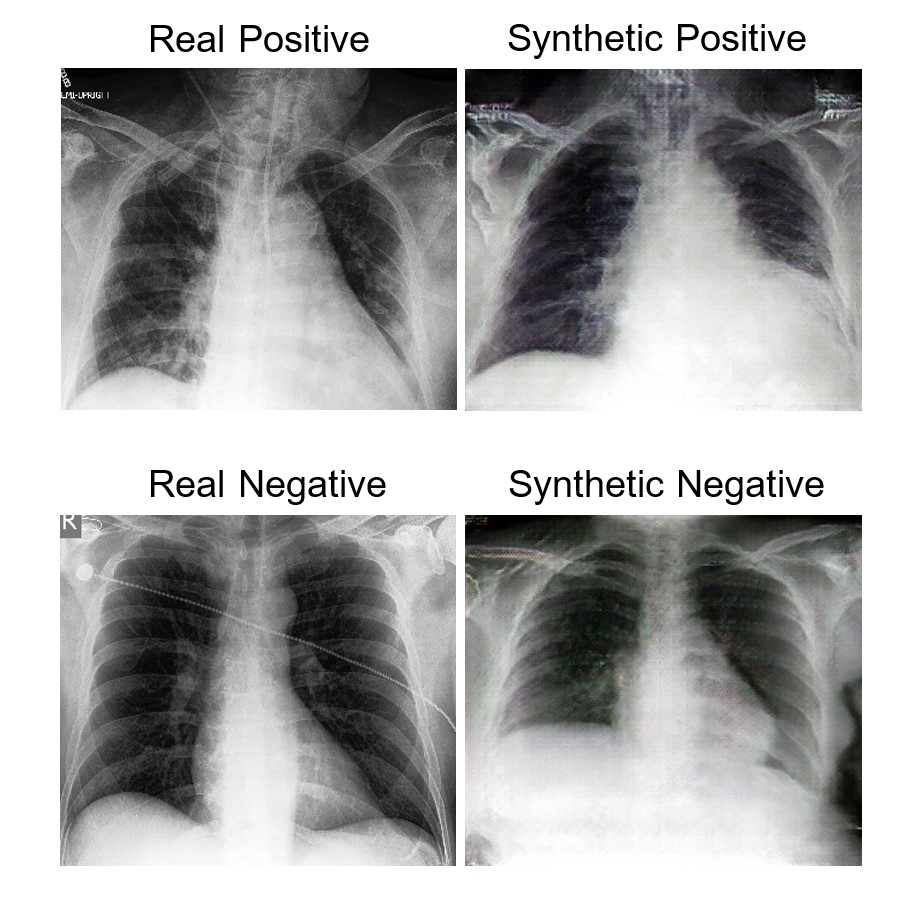

Below, examples of synthetic and real images of positive and negative classes are shown.

Training the VGG16 classification model without augmentation techniques resulted in 77.1% accuracy in distinguishing cardiomegaly from healthy chest radiograph images. However, despite the availability of adequate synthetic images, the GAN-based augmentation technique did not significantly improve the classification accuracy. This might be due to mode collapse, a phenomenon in which the generator produces new samples that add little diversity to the data. Furthermore, it was observed that the CheXpert image dataset contains corrupted images, so thorough cleaning of the data is needed.

Many different methods remain to be explored for automatic classification and for generating new synthetic examples, and deeper investigation is needed to improve the results. A continuation of this project could train extensions of GAN models, such as Progressive Growing GAN or Wasserstein GAN, on chest radiograph data, which could lead to better-quality synthetic images. Furthermore, different existing network architectures could be combined to recognize more complex patterns when training the classification model. With these ideas, the aim is to reach the accuracy level of radiology experts in detecting cardiomegaly.